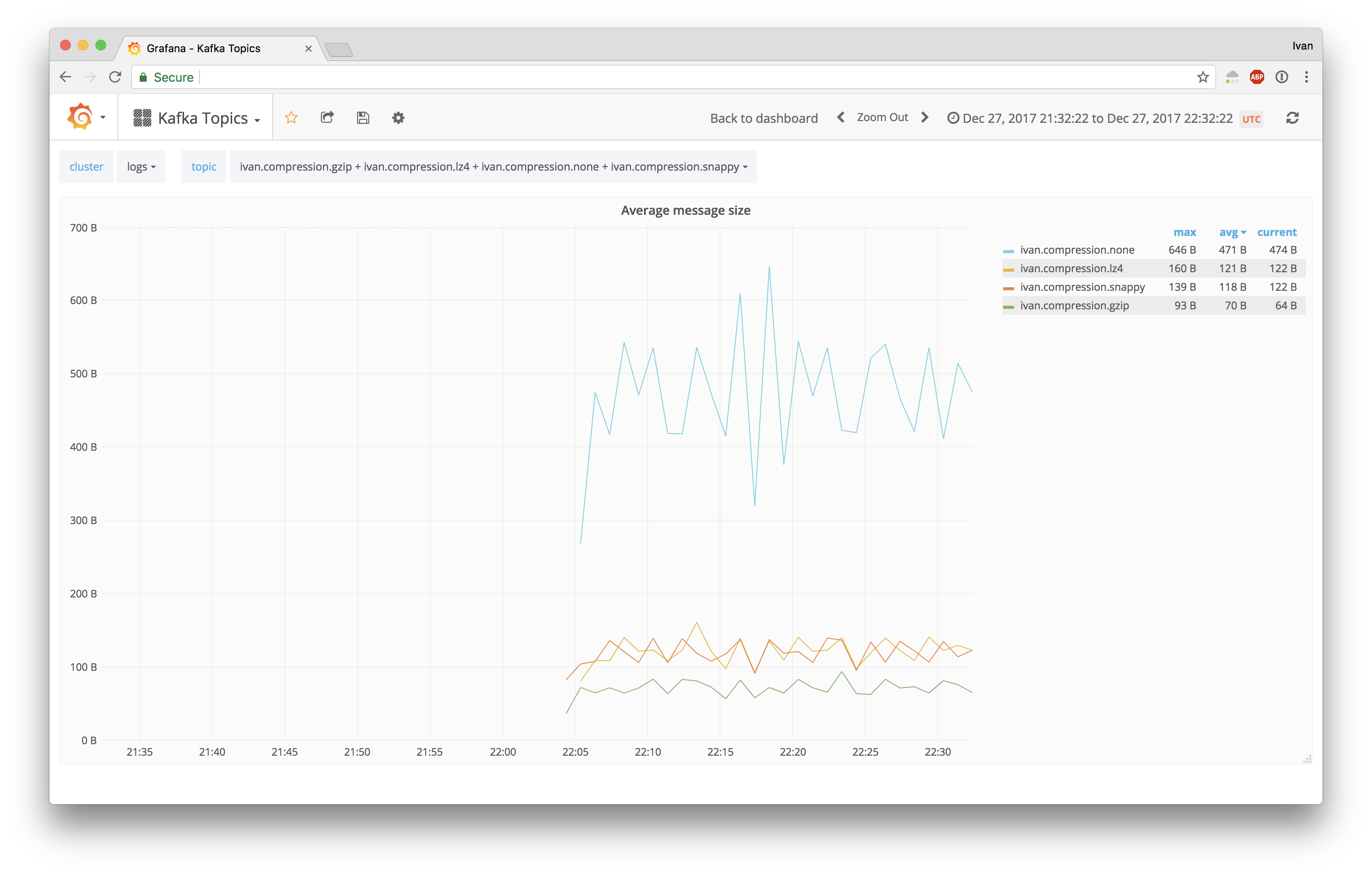

But I am seeing a `java.io.IOException: FAILED_TO_UNCOMPRESS(5)` error from snappy and a `KafkaException: Failed to decompress record stream`. The fragments involved are `Consumer.java`, `BatchEventProcessor`, a listener on `"MyAckTopic"` with `containerFactory = "kafkaListenerContainerFactory"`, the call `EventProcessor.process(message.getPayload())`, and `ConsumerConfig`. If you override the kafka-clients jar to 2.1.

kafka-python is a Python client for the Apache Kafka distributed stream processing system. It is designed to function much like the official Java client, with a sprinkling of pythonic interfaces (e.g., consumer iterators). kafka-python is best used with newer brokers (0.9+), but is backwards-compatible with.

Kafka lets you compress your messages as they travel over the wire. When using Kafka, I can set a codec by setting a property on my Kafka producer: `compression.type` is the compression type for all data generated by the producer. Valid values are none, gzip, snappy, lz4, or zstd. The producer throughput with Snappy compression was roughly 60.8MB/s, compared to 18.5MB/s for the GZIP producer.
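The producer-side codec setting can be sketched with plain `java.util.Properties`, so it runs without the Kafka client on the classpath. This is a minimal sketch, not the original poster's configuration: the class name `CompressionConfigSketch`, the helper `producerProps`, and the broker address `localhost:9092` are assumptions for illustration. The keys `bootstrap.servers` and `compression.type` are the standard producer property names, and the codec list is the one given above.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Properties;

class CompressionConfigSketch {
    // Codec names listed in the text: none, gzip, snappy, lz4, zstd.
    static final List<String> VALID_CODECS =
            Arrays.asList("none", "gzip", "snappy", "lz4", "zstd");

    // Hypothetical helper: builds producer properties and rejects unknown codec names early.
    static Properties producerProps(String bootstrapServers, String codec) {
        if (!VALID_CODECS.contains(codec)) {
            throw new IllegalArgumentException("Unsupported compression.type: " + codec);
        }
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers); // assumed broker address
        props.setProperty("compression.type", codec);             // codec for all produced data
        return props;
    }

    public static void main(String[] args) {
        Properties props = producerProps("localhost:9092", "snappy");
        System.out.println("compression.type = " + props.getProperty("compression.type"));
    }
}
```

In a real application these properties would be passed to the producer (or to the spring-kafka producer factory) along with the serializer settings, which are left out here.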

A batch of messages can be compressed and sent to the server, and this batch is written in compressed form and will remain compressed in the log. The batch will only be decompressed by the consumer.
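To illustrate why compressing a whole batch pays off, here is a small sketch that compresses a batch as one unit and compares it with compressing each message separately. It uses the JDK's GZIP streams as a stand-in, since snappy is not part of the standard library, and it does not reproduce Kafka's actual record batch format. The class `BatchCompressionSketch`, its helpers, and the sample payloads are all hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.GZIPInputStream;
import java.util.zip.GZIPOutputStream;

class BatchCompressionSketch {
    // Compress a byte array with GZIP (stand-in for the producer-side codec).
    static byte[] gzip(byte[] raw) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(out)) {
            gz.write(raw);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    // Decompress a GZIP byte array (stand-in for the consumer-side step).
    static byte[] gunzip(byte[] compressed) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        try (GZIPInputStream gz = new GZIPInputStream(new ByteArrayInputStream(compressed))) {
            byte[] buf = new byte[4096];
            int n;
            while ((n = gz.read(buf)) != -1) {
                out.write(buf, 0, n);
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return out.toByteArray();
    }

    // Join messages into one newline-separated payload; a simplification of a real batch.
    static byte[] joinBatch(List<String> messages) {
        return String.join("\n", messages).getBytes(StandardCharsets.UTF_8);
    }

    // Total size when every message is compressed on its own.
    static int individuallyCompressedSize(List<String> messages) {
        int total = 0;
        for (String m : messages) {
            total += gzip(m.getBytes(StandardCharsets.UTF_8)).length;
        }
        return total;
    }

    // Hypothetical sample payloads with the repetition typical of event streams.
    static List<String> sampleMessages(int count) {
        List<String> messages = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            messages.add("{\"event\":\"ack\",\"id\":" + i + "}");
        }
        return messages;
    }

    public static void main(String[] args) {
        List<String> messages = sampleMessages(200);
        byte[] batch = joinBatch(messages);
        byte[] compressedBatch = gzip(batch);
        System.out.println("raw batch bytes:          " + batch.length);
        System.out.println("compressed as one batch:  " + compressedBatch.length);
        System.out.println("compressed one by one:    " + individuallyCompressedSize(messages));
        System.out.println("round trip intact:        "
                + java.util.Arrays.equals(batch, gunzip(compressedBatch)));
    }
}
```

Compressing the batch as a unit lets the codec exploit repetition across messages, which per-message compression cannot do.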

# Kafka Streams: enabling snappy compression

I am trying to consume messages from Kafka using spring-kafka, using the code below.

Kafka supports the compression of batches of messages with an efficient batching format. In this mode, the data gets compressed at the producer, which does not wait for the ack from the broker.
